Skill is All You Need: Lessons from Building Marketing Agents at Noumena

Noumena has built an AI-native Growth Intelligence system that applies AI to bring greater certainty to commercial seeding and conversion, enabling brands to fundamentally reinvent growth.

Part 1: The Problem

1.1 Workflows Hit a Wall

Agent engineering begins with an architectural choice: decompose tasks into fixed pipelines, or let the model navigate an open execution space? Canvas-based builders like n8n and Dify work well for deterministic problems—linear logic, clear input-output boundaries, stable processes. Report generation, data cleaning, anything where a handful of workflows can be reused indefinitely. Here, engineering focuses on reliability and control.

When Noumena applied this approach to marketing, the abstraction broke down. The challenge isn't complexity; it's that decisions depend on context that never stops shifting.

- A campaign report shows ten million influencer views. The CMO sees brand momentum and approves more budget. The content team sees a 70% drop-off in three seconds and rewrites the hook. The media buyer sees cost-per-click and kills the campaign. Same data, incompatible conclusions—a linear workflow cannot reconcile disagreement about what success means.

- The definition of "good" moves constantly. High acquisition costs during launch are tuition; the same numbers in steady-state are a crisis. A meme converts on Monday and embarrasses the brand by Friday. Hardcoded rules will always chase targets that have already moved.

- Industry context compounds this. Lipstick sells on impulse and influencer urgency. Apply that to wealth management and you'll fail—trust-building and compliance operate on different timescales. Enterprise brands protect reputation; startups optimize for survival. No universal playbook exists.

These dynamics break traditional workflows. Workflows assume enumerable problems and fast feedback loops. Marketing feedback is slow, attribution is noisy, and tuning costs escalate with complexity. Teams add branches to cover edge cases, but the system grows harder to maintain without becoming more general.

This led Noumena to a core design principle: marketing problems shouldn't be frozen into fixed pipelines. The system needs goal-level flexibility—dynamic decisions within constraints, rather than one optimal path assumed to work forever.

1.2 The Template Drawback

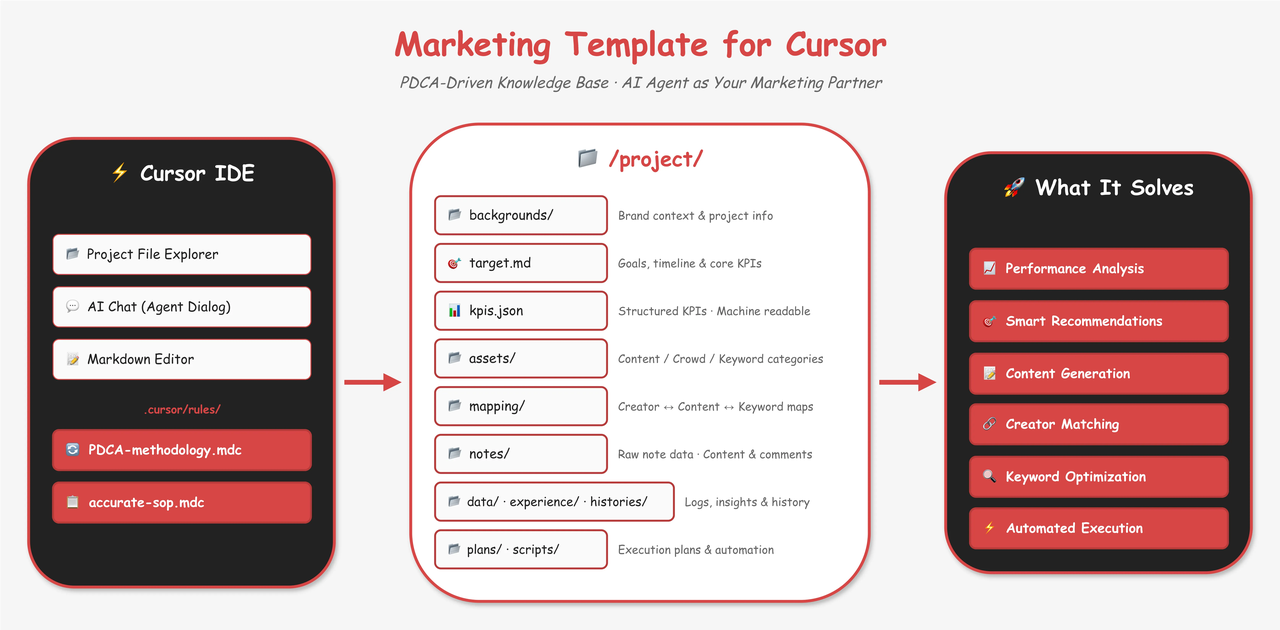

To address the generalization challenge, Noumena experimented with file-system-as-memory: using directory structures to store and reuse expert knowledge for marketing agents.

The idea came from Cursor's .mdc files, which define persistent rules loaded as context at runtime to constrain model behavior. We adapted this mechanism to encode marketing logic rather than code conventions, building a structured directory that separated data, tools, processes, and accumulated expertise. Each workflow got its own .mdc file with query-based trigger rules.

Unlike traditional workflows, these files guided thinking rather than dictating execution. We injected methodology, not process: "When analyzing ROI decline, check macro trends before creative performance." "Given tools A, B, and C, here's how to combine them." The agent received a map and a toolbox, but decided how to navigate.

This approach produced comprehensive outputs. Given the prompt "analyze my campaign results," the system generated detailed reviews across multiple dimensions by combining our context with available tools.

But limitations emerged quickly. An .mdc file is static context—once loaded, its assumptions are treated as permanently valid. As industries shift, campaign phases change, and business scales evolve, templates stop fitting. Richer templates meant more complex trigger rules, which increased context dependence and reduced adaptability.

Applying static templates to dynamic problems is a structural mismatch. This approach loosened rigid workflows but didn't solve the underlying issue: marketing decisions happen in environments that change constantly. The system needed the ability to update its own context, not just load it once at startup.

1.3 From Template to Skill

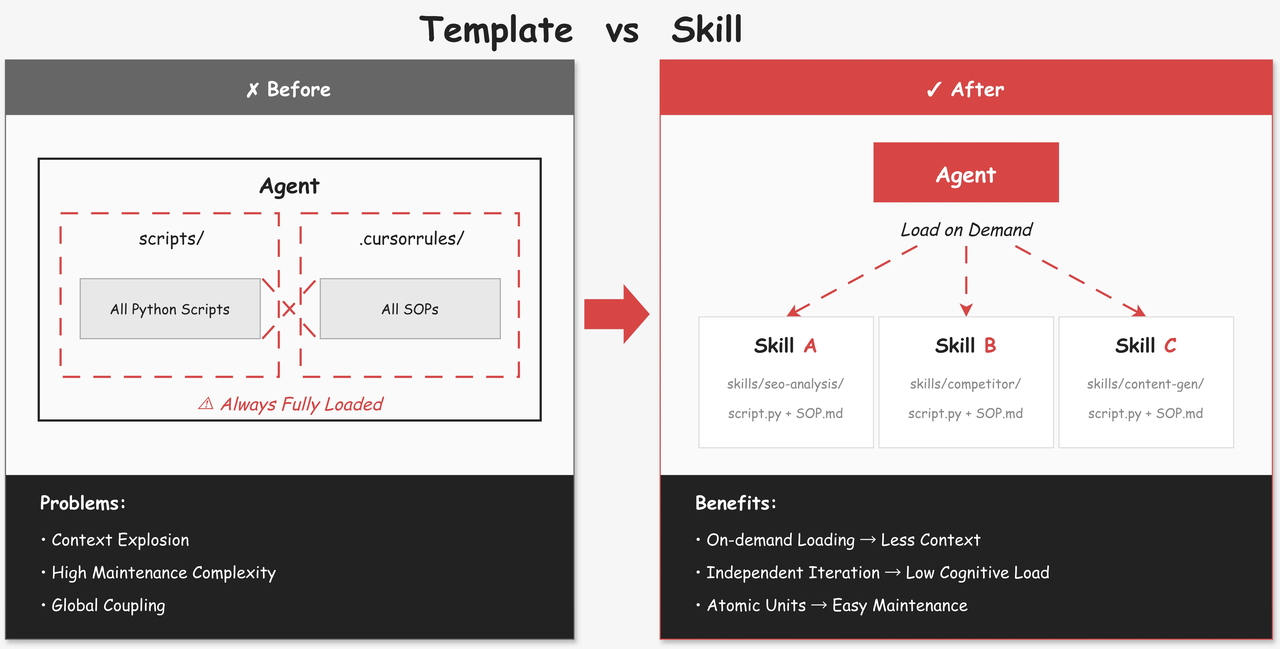

When Anthropic released Claude Skills, the architecture aligned directly with the constraints we were facing.

The core shift is that capabilities load at runtime rather than upfront. Each skill solves one well-defined problem and enters context only when the agent determines it's needed. The system no longer carries every possible rule from the start. Context grows incrementally as decisions unfold, significantly reducing token pressure.

Skills exist as independent folders, enabling natural decomposition of complex logic. Unlike centralized scripts or global rules, each skill encapsulates the tool descriptions, reasoning constraints, and execution guidance required for a specific problem. Complex systems evolve into composable capability units rather than monolithic sprawl.
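To make the load-on-demand idea concrete, here is a minimal sketch, assuming each skill is a folder containing a SKILL.md file. The `SkillRegistry` class, the folder names, and the demo contents are hypothetical illustrations, not the Claude Skills loader or Noumena's implementation.

```python
import tempfile
from pathlib import Path


class SkillRegistry:
    """Indexes skill folders by name but reads SKILL.md only on demand."""

    def __init__(self, root: Path):
        # Only folder names are indexed up front; no file contents are read yet.
        self.paths = {
            p.name: p
            for p in root.iterdir()
            if p.is_dir() and (p / "SKILL.md").is_file()
        }
        self.loaded: dict[str, str] = {}

    def available(self) -> list[str]:
        """Names the agent can choose from, without their contents."""
        return sorted(self.paths)

    def load(self, name: str) -> str:
        """Read a skill's SKILL.md into context the first time it is needed."""
        if name not in self.loaded:
            skill_file = self.paths[name] / "SKILL.md"
            self.loaded[name] = skill_file.read_text(encoding="utf-8")
        return self.loaded[name]

    def context_size(self) -> int:
        """Characters currently held in context (a rough stand-in for tokens)."""
        return sum(len(text) for text in self.loaded.values())


def build_demo_registry() -> SkillRegistry:
    """Create two hypothetical skill folders in a temp directory."""
    root = Path(tempfile.mkdtemp())
    demo = {
        "budget_allocation": "Scope: launch budgets only.",
        "campaign_review": "Scope: post-campaign analysis.",
    }
    for name, body in demo.items():
        (root / name).mkdir()
        (root / name / "SKILL.md").write_text(body, encoding="utf-8")
    return SkillRegistry(root)
```

Until `load` is called, the registry holds only names, so context cost scales with the skills actually used rather than the skills installed.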

This modularity transforms maintenance. Modifying a Skill requires understanding only its own behavior and boundaries, not the entire agent architecture. Domain experts can iterate on specific problems without grasping the full system, making capability updates a local, controllable engineering task.

Part 2: Core Architecture

2.1 Anthropic's Ecosystem Perspective

When building our Skill system, we started with Anthropic's official framework. In “Don't Build Agents, Build Skills Instead,” Skills are layered by source and generality:

Foundational Skills come from official teams and provide general capabilities like document processing and code execution. Third-Party Skills are built by ecosystem partners to connect external SaaS tools—Notion workspace search, Browserbase automation. Enterprise Skills are internal constructs that encode organization-specific processes and business logic.

This taxonomy clarifies ownership and allows general, ecosystem, and proprietary capabilities to evolve independently. But from an engineering perspective, it addresses capability sourcing without covering business logic. In complex tasks, having available Skills isn't enough. The agent must still decide when to invoke which capabilities and how to organize reasoning and state across multiple calls.

Based on this observation, we introduced a decision-oriented layer above the source taxonomy to govern how Skills combine and sequence.

2.2 Noumena's Cognitive Layering

The Noumena layering emerged from a simple observation: marketing involves long-tail problems across different functional roles. For brand insight reports alone, every strategist might have several distinct approaches. Handling nonlinear, context-sensitive tasks meant restructuring the system around the agent's decision process: an OS Agent managing runtime environment and state, Atomic Skills providing deterministic execution, and Thinkflow Skills handling task decomposition and path selection.

OS Agent sits at the foundation. Its job isn't executing business logic but providing stable runtime infrastructure and system calls. It manages command-line tools, script interpreters, and file I/O while maintaining a structured workspace. Intermediate results and outputs persist as files in this directory. The benefit is direct: offloading state from context to filesystem reduces token pressure and enables task recovery after interruption. Working memory lives in readable, writable file structures rather than one-time context injection.
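The recovery benefit can be illustrated with a small sketch, assuming intermediate results are JSON-serializable and each step writes one file into a task directory. `Workspace`, `run_steps`, and the step names are hypothetical; the actual workspace layout is not described in this article.

```python
import json
import tempfile
from pathlib import Path


class Workspace:
    """Stores each step's result as a JSON file instead of keeping it in context."""

    def __init__(self, root: Path):
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def _path(self, step: str) -> Path:
        return self.root / f"{step}.json"

    def save(self, step: str, result: dict) -> None:
        self._path(step).write_text(json.dumps(result), encoding="utf-8")

    def load(self, step: str) -> dict:
        return json.loads(self._path(step).read_text(encoding="utf-8"))

    def done(self, step: str) -> bool:
        return self._path(step).exists()


def run_steps(ws: Workspace, steps: list) -> list[str]:
    """Run (name, function) steps in order, skipping any already persisted."""
    executed = []
    for name, fn in steps:
        if ws.done(name):
            continue  # result survives from an earlier, interrupted run
        ws.save(name, fn())
        executed.append(name)
    return executed


# Hypothetical two-step task; the lambdas stand in for real work.
demo_steps = [
    ("fetch", lambda: {"rows": 3}),
    ("summarize", lambda: {"summary": "ok"}),
]
```

If a run stops after `fetch`, calling `run_steps` again with the same workspace executes only `summarize`, because the first result is already on disk.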

Atomic Skills layer above, providing deterministic execution with clear boundaries. Each Atomic Skill implements scripts with well-defined inputs and stable output structures. They don't participate in complex decisions or perceive business context. They complete single, verifiable actions: data retrieval, content processing, structured writes. This layer serves as the agent's execution unit, guaranteeing stability so higher-level reasoning doesn't repeatedly handle low-level uncertainty.
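As an illustration of "well-defined inputs and stable output structures," the sketch below wraps a single cost-per-click calculation. The metric, the type names, and the two-decimal rounding are assumptions chosen for the example; they are not taken from Noumena's skill library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpendInput:
    spend: float  # total spend in the account currency
    clicks: int   # number of clicks attributed to that spend


@dataclass(frozen=True)
class SpendOutput:
    cost_per_click: float


def cost_per_click(data: SpendInput) -> SpendOutput:
    """Single verifiable action: no business context, no branching on intent."""
    if data.spend < 0:
        raise ValueError("spend must be non-negative")
    if data.clicks <= 0:
        raise ValueError("clicks must be positive")
    return SpendOutput(cost_per_click=round(data.spend / data.clicks, 2))
```

Invalid input fails loudly instead of returning a guess, which keeps low-level uncertainty out of the reasoning layer above.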

Thinkflow Skills carry the real complexity. A Thinkflow isn't a simple process script but a structured representation of expert thinking. It decomposes high-level goals into staged judgments and decides at runtime which Atomic Skills to invoke and how to evaluate intermediate results. When loading a Thinkflow, the agent first reads its capability scope and preconditions, then checks current context. Missing information triggers a pause and backtrack to retrieve inputs. With context satisfied, Thinkflows dynamically select analysis paths based on user intent and task emphasis rather than following predetermined sequences.
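The precondition check and runtime path selection described above can be sketched as follows. In the real system the model makes these judgments from natural-language instructions; here plain dictionary lookups stand in for that reasoning, and the `Thinkflow` class, the context keys, and the skill names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Thinkflow:
    name: str
    preconditions: list[str]     # context keys that must be present
    paths: dict[str, list[str]]  # task emphasis -> ordered Atomic Skill names


def plan(flow: Thinkflow, context: dict, emphasis: str) -> dict:
    """Check preconditions first, then pick a path based on task emphasis."""
    missing = [key for key in flow.preconditions if key not in context]
    if missing:
        # Pause and backtrack: the caller should retrieve these inputs first.
        return {"status": "needs_input", "missing": missing}
    if emphasis not in flow.paths:
        return {"status": "out_of_scope", "emphasis": emphasis}
    return {"status": "ready", "steps": list(flow.paths[emphasis])}


campaign_review = Thinkflow(
    name="campaign_review",
    preconditions=["campaign_data", "campaign_phase"],
    paths={
        "creative": ["load_metrics", "score_hooks", "summarize"],
        "budget": ["load_metrics", "cost_per_click", "summarize"],
    },
)
```

The same Thinkflow yields different step sequences for different emphases, and yields no steps at all until its preconditions are met.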

This cognitive layering addresses the fundamental problem of workflows that freeze paths at design time. Atomic Skills provide stable, reusable execution. Thinkflows determine when and in what order to combine them. Path selection happens at runtime, not design time, allowing agents to adjust decisions within constraints as context evolves. This mirrors how experts actually work through problems.

Part 3: Orchestrating Thinkflows

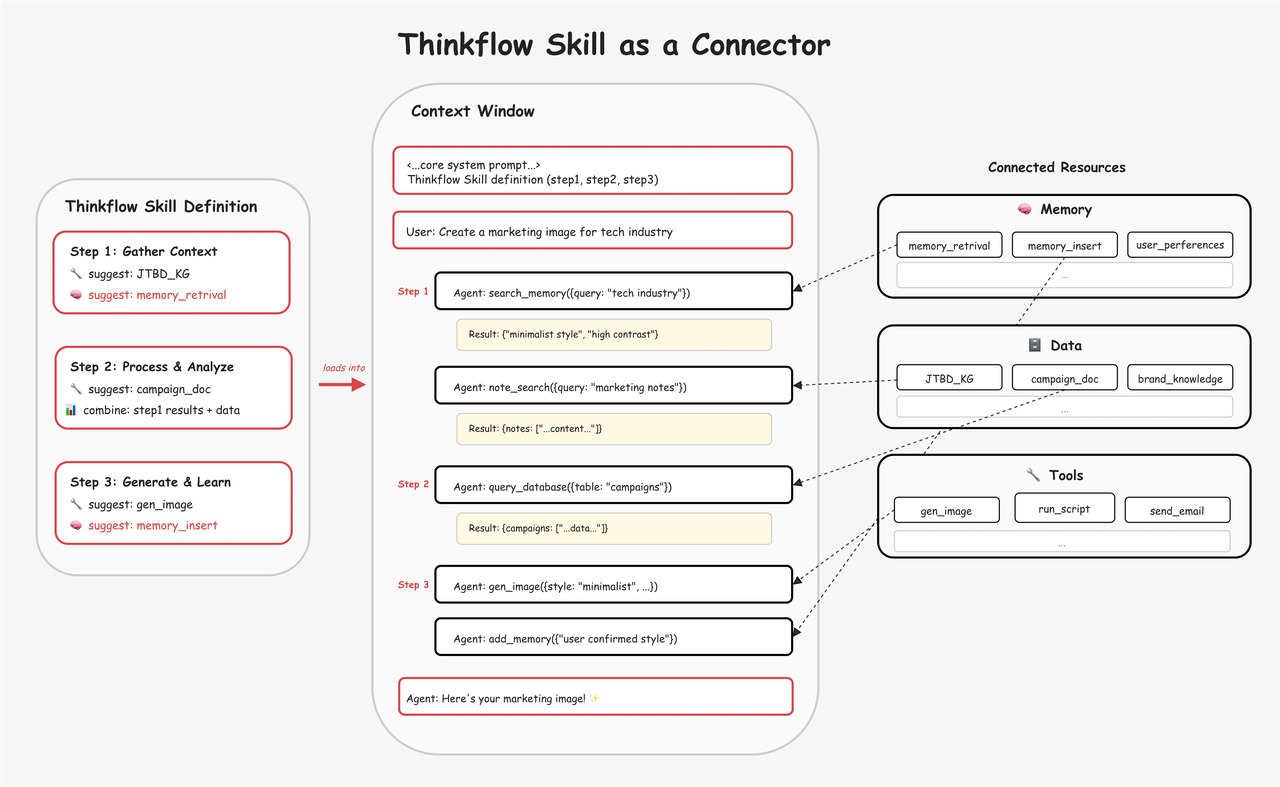

At the architectural level, a Thinkflow isn't merely a file that stores logic. It's the critical middle layer connecting user intent to underlying capabilities, governing context and bridging all resources: code fragments, business data, and user memory.

3.1 SKILL.md as Router

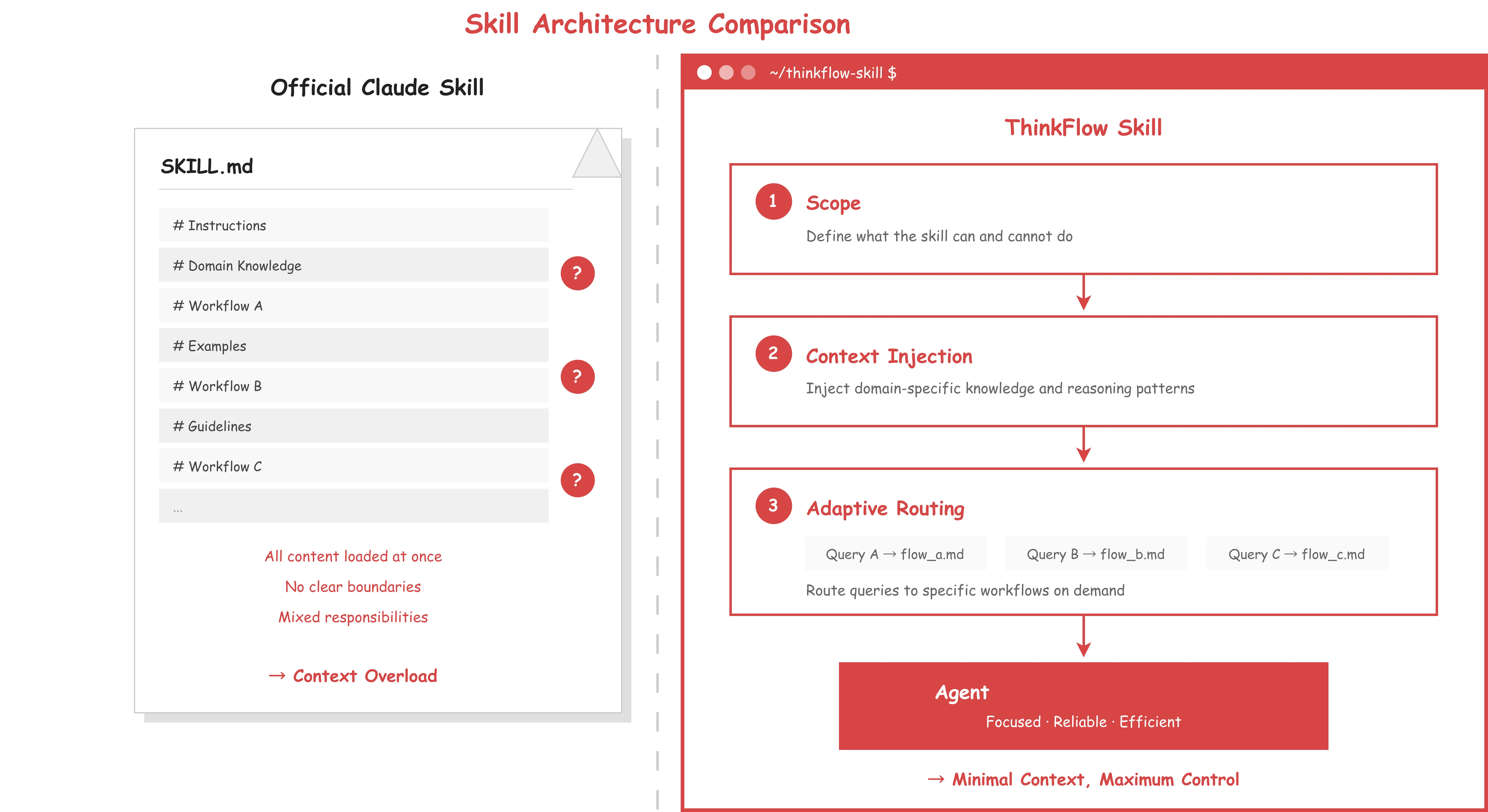

In Anthropic's original definition, a skill resembles passive documentation. As problem complexity increases, this form tends toward two extremes: unstable behavior from insufficient specification, or performance degradation from excessive context added to cover more cases.

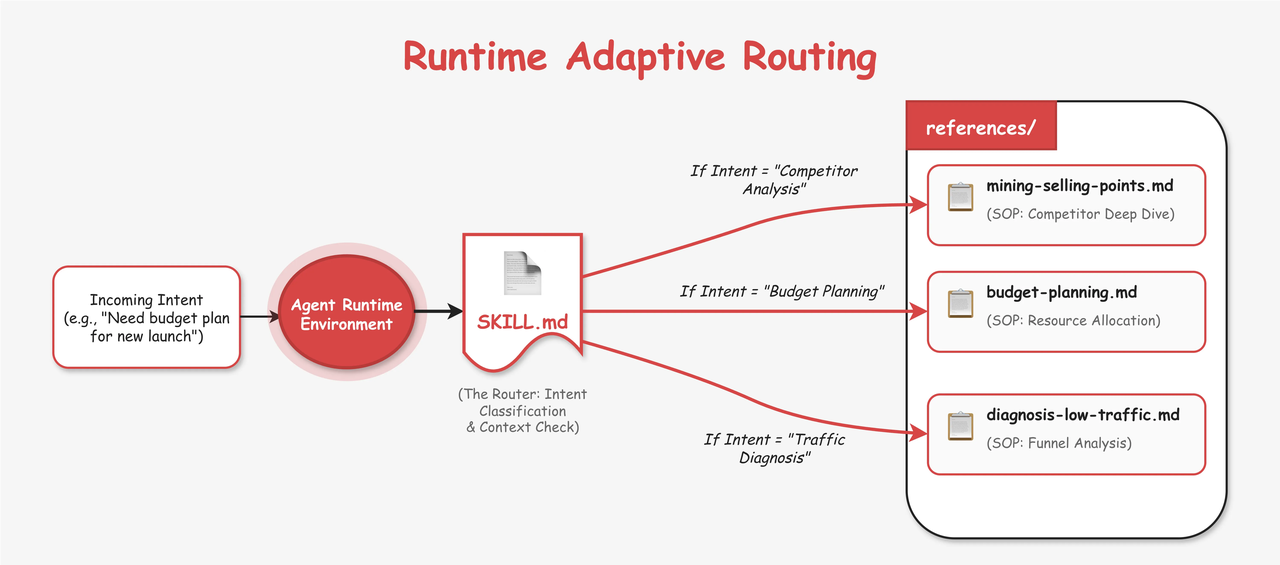

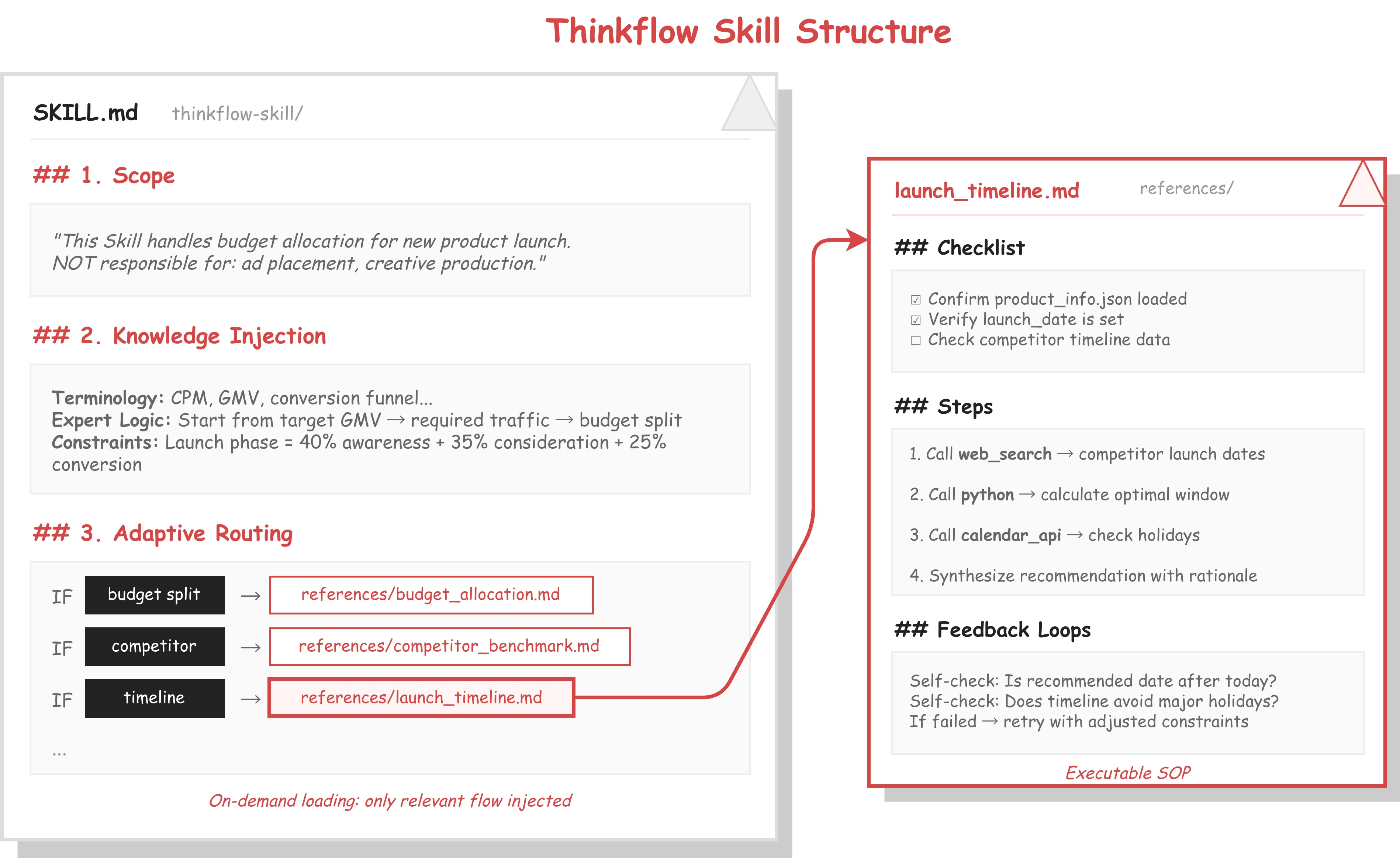

We designed Thinkflow Skills with stronger constraints, positioning SKILL.md as a routing gateway. Three dimensions channel divergent reasoning while preserving agent autonomy:

Scope opens every Thinkflow SKILL.md by defining explicit boundaries to prevent hallucination. "This Skill handles budget allocation for new product launches only; it does not execute ad placement operations." The agent knows precisely what it can and cannot do.

Knowledge Injection follows scope, embedding domain-specific background that ensures the model understands specialized terminology and the reasoning patterns experts typically apply.

Adaptive Routing comes last, enumerating common query types and mapping each to a corresponding thinkflow.md file in the references directory. Detailed processes don't appear in SKILL.md itself. Irrelevant workflows never enter context.
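A hypothetical SKILL.md with these three sections, and a parser for its routing table, might look like the sketch below. The section headings, the `->` syntax, and the reference file names are assumptions made for illustration; the article does not specify the actual file format. In practice the agent reads the table itself, so the parser only shows that the routing section carries file pointers rather than the procedures themselves.

```python
SKILL_MD = """\
# Scope
This Skill handles budget allocation for new product launches only; it does not execute ad placement operations.

# Knowledge
(domain background and terminology go here)

# Routing
- budget split across channels -> references/channel_split.thinkflow.md
- launch phase pacing -> references/launch_pacing.thinkflow.md
"""


def parse_routes(skill_md: str) -> dict[str, str]:
    """Extract the query-type -> reference-file mapping from the Routing section."""
    routes: dict[str, str] = {}
    in_routing = False
    for line in skill_md.splitlines():
        if line.startswith("# "):
            # A new heading starts; only the Routing section is of interest.
            in_routing = line.strip() == "# Routing"
        elif in_routing and line.startswith("- ") and " -> " in line:
            query_type, reference = line[2:].split(" -> ", 1)
            routes[query_type.strip()] = reference.strip()
    return routes
```

Only the reference file chosen from this table would be read next; the other procedures stay on disk.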

3.2 References as SOP

If SKILL.md decides which path to take, reference files define how to walk it. The goal isn't abstract methodology but converting expert tacit knowledge into executable standard operating procedures.

Each reference file contains three structures:

Checklist specifies preconditions the agent must verify before execution. "Confirm campaign_data.csv is loaded and contains yesterday's spend field."

Steps define concrete execution, serving as the interface where Thinkflows invoke lower-level capabilities. Each step can call other Skills, enabling composition. Step 1 might invoke web_deep_research to gather competitor content. Step 2 calls Python to calculate engagement rate averages. Step 3 synthesizes findings into conclusions.

Feedback Loops establish self-verification mechanisms for logical consistency. "Does the calculated total engagement equal likes plus saves plus comments? If not, review the Python code."
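The two quoted examples, the checklist item and the engagement consistency check, can be expressed as small verification helpers. This is a sketch under assumptions: the CSV is passed in as text, and the column name `spend_yesterday` and the row keys are hypothetical stand-ins for whatever the real data uses.

```python
import csv
import io


def check_preconditions(csv_text: str, required_field: str) -> list[str]:
    """Checklist: return a list of problems; an empty list means safe to proceed."""
    reader = csv.DictReader(io.StringIO(csv_text))
    if not reader.fieldnames:
        return ["file is empty"]
    if required_field not in reader.fieldnames:
        return [f"missing field: {required_field}"]
    return []


def verify_engagement(row: dict) -> bool:
    """Feedback loop: total engagement must equal likes + saves + comments."""
    expected = row["likes"] + row["saves"] + row["comments"]
    return row["total_engagement"] == expected
```

A failed check gives the agent a concrete reason to stop or to re-examine the preceding step, instead of carrying an inconsistent number into its conclusions.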

3.3 One Interface, All Context

Thinkflow naturally serves as a connector between capabilities and data. Reading databases, executing scripts, and writing to memory are all instances of the same action class. Thinkflow doesn't implement these capabilities directly but dispatches underlying tools through natural language instructions at appropriate moments, leaving parameter extraction and invocation decisions to the agent.

A step might instruct the model to query our maintained knowledge graph via the JTBD_KG tool based on user input. A later step might have it synthesize prior results, extract parameters, and call an image generation endpoint. This pattern unifies tool abstraction. Workflow orchestration no longer requires extensive explicit coding—Thinkflow handles it at runtime. Because execution paths unfold incrementally, relevant context loads on demand, avoiding the bloat of upfront injection.

Memory integration becomes equally natural within this structure. Through standardized memory read and write capabilities, Thinkflows actively retrieve historical experience during execution and update memory based on user feedback.

In practice, identical workflows often produce feedback that diverges completely across users. Skill-level memory management allows the agent to maintain process consistency while delivering outputs aligned with individual preferences. Before calling a script, the agent might retrieve memories of the user's industry context. After receiving feedback, it updates memory with a new understanding of that user's editorial preferences.
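A minimal sketch of skill-level memory, assuming one JSON file per user and skill. The `SkillMemory` class, the file naming scheme, and the stored keys are hypothetical; the article does not describe the actual memory interface.

```python
import json
import tempfile
from pathlib import Path


class SkillMemory:
    """Keeps per-user, per-skill facts in small JSON files."""

    def __init__(self, root: Path):
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def _file(self, user_id: str, skill: str) -> Path:
        return self.root / f"{user_id}__{skill}.json"

    def read(self, user_id: str, skill: str) -> dict:
        """Return stored facts, or an empty dict for a user with no history."""
        path = self._file(user_id, skill)
        if not path.exists():
            return {}
        return json.loads(path.read_text(encoding="utf-8"))

    def update(self, user_id: str, skill: str, **facts) -> dict:
        """Merge new facts over existing ones and persist the result."""
        merged = {**self.read(user_id, skill), **facts}
        self._file(user_id, skill).write_text(
            json.dumps(merged, indent=2), encoding="utf-8"
        )
        return merged


memory = SkillMemory(Path(tempfile.mkdtemp()) / "memory")
```

Scoping the file to both user and skill lets the same procedure run for everyone while preferences stay separate per user.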

Part 4: Lessons to Learn

4.1 Limitations

While building marketing agents at Noumena, several constraints emerged, stemming not from implementation bugs but from fundamental tensions around model capabilities, architectural complexity, and framework dependencies.

Nesting depth caps at three levels in practice. When Thinkflows call Atomic Skills through deeper chains, models lose track of top-level objectives, causing tasks to derail midstream.

Cost versus quality presents an unavoidable tradeoff. Running production marketing workflows on Noumena's skill platform, we found that no model currently offers both low cost and stable complex orchestration. Cheaper models in demanding Thinkflow scenarios produce invocation failures and unstable structured outputs. Architecture alone cannot resolve this; it requires continuous calibration between operational stability and long-term economics.

Framework dependency introduces debugging opacity. Our reliance on the Claude Agent SDK accelerated initial development but obscures failure modes. When Skills fail to trigger or routing misfires, middleware encapsulation makes root cause diagnosis difficult through conventional methods.

These constraints mean Skill architecture isn't infinitely scalable. Structural flexibility demands ongoing attention to granularity, model selection, and framework evolution.

4.2 Experience of Building Skills

Minimize ad-hoc code. Early Atomic Skills lacked robustness, forcing models to write temporary Python for edge cases. Hallucination rates spiked as generated code consumed context rapidly, diluting attention. Worse, bypassing purpose-built skills meant skipping reinforcement logic, causing errors to cascade until users couldn't identify where hallucination began.

Invest in data governance. Users expect agents to extract insights from messy raw data, but output quality correlates directly with input quality. We invested heavily in governance tooling to surface value hidden in raw inputs.

Enable low-code iteration through Skill DevOps. Domain experts need to build skills without coding. Applying test-driven development principles, we created two specialized agents. Skill Builder guides experts through dialogue to extract business logic into standardized file structures. Skill Evolver takes problem-solution pairs, converts them into evaluation rubrics, then runs execute-diagnose-repair cycles, autonomously fixing Skill descriptions or code.

This creates a file-based reinforcement learning loop. Experts serve as reward models defining success; Builder and Evolver serve as policy optimizers refining Skills. Organizations achieve continuous capability evolution without model training costs.
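The execute-diagnose-repair cycle can be sketched as a short loop. In a real system `execute` and `repair` would be model calls and the rubrics would come from expert problem-solution pairs; here they are plain callables so the control flow is visible. The function name, its signature, and the round limit are hypothetical.

```python
from typing import Callable


def evolve(
    skill_text: str,
    execute: Callable[[str], str],
    rubrics: dict[str, Callable[[str], bool]],
    repair: Callable[[str, list[str]], str],
    max_rounds: int = 3,
) -> tuple[str, bool]:
    """Run execute-diagnose-repair until every rubric passes or rounds run out."""
    for _ in range(max_rounds):
        output = execute(skill_text)  # execute: run the skill
        failed = [name for name, check in rubrics.items() if not check(output)]
        if not failed:                # diagnose: all rubrics satisfied
            return skill_text, True
        skill_text = repair(skill_text, failed)  # repair: edit the skill file text
    # The final repair is returned unverified once the round budget is spent.
    return skill_text, False


# Stub components standing in for model calls and expert-defined rubrics.
demo_rubrics = {"has_scope": lambda output: "Scope:" in output}


def demo_repair(text: str, failed: list[str]) -> str:
    return text + "\nScope: campaign analysis only."
```

The loop changes only the skill file, never the model, which is what keeps the iteration cost at the level of editing text.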

Fewer Workflows, More Skills

The arc of Noumena's development moved from rigid workflows to modular skill architecture. By decoupling execution logic from cognitive models and infrastructure, we addressed context bloat and reasoning instability in complex business scenarios. Domain experts now iterate on logic directly through structured skill files, with automated evaluation ensuring quality.

We believe the engineering focus for future agents will shift from model parameters to governance of procedural knowledge. Product moats will depend on converting expert reasoning into reusable, verifiable skill libraries. As model capabilities evolve, the agents that deliver real value won't be those with the most elaborate workflows, but those with the deepest skill libraries.